Bringing it all together

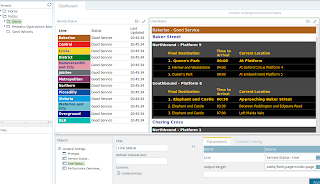

This is the third article in a trilogy on using Pentaho Business Analytics to do Business Intelligence on real-time data sources in next to no time. The first two articles cover using Pentaho Report Designer reports in combination with Pentaho Data Integration transformations to provide summary and detail level reports in a dashboard. The details of how to create these are covered in Part I and Part II.

Let's take a look at what I'm aiming for at the end of this article:

This shows the summary report covered in Part I, the detail level report from Part II, and what's that on the far right? This is a Pentaho Enterprise Edition Analyzer view running on Mondrian, in this instance reporting off a Cassandra database that is updated every 60 seconds. So that's real-time (well, to the nearest 60 seconds) OLAP analysis!

This example is a huge oversimplification of what is possible using the technology, but a crucial component here is Pentaho Data Integration's data blending technology. The outcome is that we can use a NoSQL database as a data source for Mondrian via JDBC.

There are three transformations behind this:

- Read the API and load the data into Cassandra

- Clear the Mondrian Data cache

- Read from Cassandra and make the data available over JDBC

Reading the TfL API and putting the results into Cassandra

The first part is to get some data. This reuses the basic API call and XML parsing from Part I. In this case I'm also capturing the status details field, which I want to store in my Cassandra database for possible future enhancement (stay tuned). I also separate the time and date elements to make the Cassandra key.

There is really very little to configure to write the data into Cassandra: set details like the column family (think wonky table), specify the fields to use as a key, and that is about it.
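As a rough illustration of the key handling described above, the sketch below splits a timestamp into separate date and time parts and joins them into a row key. The field names (`line`, `status`, `status_details`), the key layout and the `build_row` helper are all assumptions made for this example. In the actual transformation this work is done by PDI steps, not Python.

```python
from datetime import datetime


def build_row(line, status, status_details, captured_at):
    """Return a dict shaped like one row destined for the column family.

    The key layout (date, time, line name) is a hypothetical choice for
    illustration, not the one used in the original transformation.
    """
    date_part = captured_at.strftime("%Y-%m-%d")
    time_part = captured_at.strftime("%H:%M:%S")
    return {
        "key": f"{date_part}|{time_part}|{line}",
        "date": date_part,
        "time": time_part,
        "line": line,
        "status": status,
        "status_details": status_details,
    }


row = build_row("Victoria", "Good Service", "", datetime(2013, 10, 7, 8, 15, 0))
print(row["key"])  # 2013-10-07|08:15:00|Victoria
```

Keeping the date and time as separate fields means either one can be used on its own later, for example to filter on a single day.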

Clear the Mondrian Cache

When we make new data available within Cassandra and we're querying via Mondrian, we need some way of indicating that the cache needs to be updated. There are a couple of ways to achieve this, including telling the data model not to cache the data, but in this case I'll take the nuclear option and blow away the cache after loading new data. This can be done via an API call in version 5 of Pentaho, so I used an HTTP Client step in PDI.

I just used a simple job, set to repeat on a 60-second loop, to run these two transformations.
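For readers who think better in code than in job diagrams, here is a minimal sketch of the same load-then-flush loop. It is not the PDI job itself. The cache flush URL is an assumption on my part (check the path, port and authentication against your own server), and `run_cycles` and `clear_mondrian_cache` are hypothetical helper names.

```python
import time
import urllib.request

# Assumed endpoint for flushing the Mondrian schema cache; verify the path,
# port and authentication against your own Pentaho server before use.
CACHE_FLUSH_URL = "http://localhost:8080/pentaho/api/system/refresh/mondrianSchemaCache"


def clear_mondrian_cache(url=CACHE_FLUSH_URL):
    """Issue a plain HTTP GET, much as an HTTP Client step would."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.status


def run_cycles(load, clear_cache, cycles, interval=60, sleep=time.sleep):
    """Run load then clear_cache, pausing `interval` seconds between cycles."""
    for cycle in range(cycles):
        load()
        clear_cache()
        if cycle < cycles - 1:
            sleep(interval)


# Dry run with stand-in callables so nothing touches the network.
calls = []
run_cycles(
    load=lambda: calls.append("load"),
    clear_cache=lambda: calls.append("clear"),
    cycles=2,
    sleep=lambda seconds: calls.append(f"sleep {seconds}"),
)
print(calls)  # ['load', 'clear', 'sleep 60', 'load', 'clear']
```

The order matters: the cache is only flushed once the new data has been written, so Mondrian never rebuilds its cache from stale rows.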

Now for the Magic

So how do we use a traditionally SQL-based technology on a NoSQL database? This is where we can use one of the new features of Pentaho Business Analytics 5.0: data blending. In effect, this allows a developer to create a data transformation using the steps available in PDI and make the resulting data stream available for SQL querying via a thin JDBC driver. The transformation is stored in the enterprise repository and the data is made available via the Data Integration Server. Details on how to do this are available in the Pentaho Wiki. The transformation in this instance could not be simpler. Read the data with a Cassandra input step:


add a constant of 1 as the "count" of the number of entries to aggregate up in Mondrian, and then use a step to act as the "output", in this case a Select values step.
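To see why a constant of 1 is useful, here is a small sketch of what happens when that column is summed as a measure. The rows and the `sum_counts` helper are made up for illustration. Mondrian does the equivalent aggregation itself through the SQL it generates.

```python
from collections import defaultdict

# Hypothetical rows as they might leave the transformation: each one
# carries a constant count of 1.
rows = [
    {"line": "Victoria", "status": "Good Service", "count": 1},
    {"line": "Victoria", "status": "Minor Delays", "count": 1},
    {"line": "Victoria", "status": "Good Service", "count": 1},
    {"line": "Northern", "status": "Good Service", "count": 1},
]


def sum_counts(rows, *dimensions):
    """Sum the count measure for each combination of the given dimensions."""
    totals = defaultdict(int)
    for row in rows:
        totals[tuple(row[d] for d in dimensions)] += row["count"]
    return dict(totals)


by_status = sum_counts(rows, "status")
by_line_and_status = sum_counts(rows, "line", "status")
print(by_status)  # {('Good Service',): 3, ('Minor Delays',): 1}
```

Because every row contributes exactly 1, summing the column at any level of the cube gives the number of entries at that level.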

The final step in the transformation is to use the Data Service tab in the transformation settings. This associates a data service name with the data stream from a transformation step.

The easiest way to get at the data is to use the data source wizard in the Pentaho User Console, but before doing this you need to create a new JDBC connection (you can do this in one step, it's just cleaner to explain in two). The JDBC connection needs to use the custom driver type and a custom connection URL.

For the custom connection URL I used:

jdbc:pdi://localhost:9080/kettle?webappname=pentaho-di

and for the driver:

org.pentaho.di.core.jdbc.ThinDriver

The final step was to create a new data source in the data source wizard. I created a simple analysis view and then added a very quick and easy stacked column visualization on an Analyzer report. Add the new report into the dashboard created in Part I, set the refresh interval as appropriate and you're done.
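If your Data Integration Server is not on localhost, the custom connection URL needs adjusting. The sketch below shows which parts of the URL I would expect to vary: the host, the port and the `webappname` parameter. The `pdi_jdbc_url` helper is hypothetical, and its defaults are simply the values I used above.

```python
from urllib.parse import urlencode

# Driver class name as entered in the custom driver field.
THIN_DRIVER_CLASS = "org.pentaho.di.core.jdbc.ThinDriver"


def pdi_jdbc_url(host="localhost", port=9080, webapp_name="pentaho-di"):
    """Build a thin-driver connection URL from its variable parts."""
    query = urlencode({"webappname": webapp_name})
    return f"jdbc:pdi://{host}:{port}/kettle?{query}"


print(pdi_jdbc_url())
print(pdi_jdbc_url(host="di.example.com"))  # hypothetical remote host
```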

So that's it! Real time BI with a combination of reports with drill down and real time OLAP style analytic reporting.

Where Next?

This has been an interesting, quick and easy experiment, but it has by no means reached the limit of what's possible. There are three paths that I might pursue in future posts: advanced visualizations, text search or predictive analytics. The visualization options could be something like a Force Directed Layout showing the stations and relative locations of the trains as a network graph. Another alternative would be a version of the Live London Underground map. The text search could be an interesting option to search for particular issues or performance problems, possibly linked with a Twitter search. Finally, another option is to use WEKA to do some predictive analytics on historic data and influencing factors on performance, and build a predictive engine to answer questions such as "It's raining, it's 08:15 on a Monday morning, I'm at Kings Cross and I need to get to Angel, should I just walk?". The answer to that question will almost certainly be yes in every instance, but you get the idea.

If you are interested in a one-on-one demo, look me up at one of the trade fairs that I regularly attend for #Pentaho. Keep up to date on when I'll be about by following me on Twitter @MarkdMelton.